The Problem

Recently, artificial neural networks (ANNs) have emerged as the most promising candidates for a wide range of tasks, such as image classification and recognition, object detection, speech recognition, and speech-to-text translation. The classification accuracy of ANNs has improved significantly, and in 2015, ANNs achieved human-level accuracy on the ImageNet 2012 Visual Recognition Challenge. However, this high classification performance comes at the expense of a large number of memory accesses and compute operations, which results in high power and energy consumption.

Spiking neural networks (SNNs) have the potential to be a power-efficient alternative to ANNs. SNNs are sparse, access very few weights, and typically use addition operations instead of the more power-intensive multiply-and-accumulate (MAC) operations.
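The addition-only claim follows from spikes being binary: multiplying a weight by a spike value of 0 or 1 yields either nothing or the weight itself, so a neuron only needs to add the weights of the inputs that actually fired. The sketch below is a minimal illustration, assuming a single neuron receiving binary input spikes in one time step; the helpers `mac_update` and `spike_update` are hypothetical names, not part of our hardware or code.

```python
from __future__ import annotations

from typing import Sequence


# Hypothetical helper for illustration: dense, ANN-style weighted sum.
def mac_update(weights: Sequence[float], inputs: Sequence[float]) -> tuple[float, int]:
    """Compute sum(w * x) with one multiply-accumulate per input."""
    total = 0.0
    macs = 0
    for w, x in zip(weights, inputs):
        total += w * x  # one multiply and one add for every input, active or not
        macs += 1
    return total, macs


# Hypothetical helper for illustration: SNN-style update with binary spikes.
def spike_update(weights: Sequence[float], spikes: Sequence[int]) -> tuple[float, int]:
    """Add only the weights of inputs that spiked; no multiplications needed."""
    total = 0.0
    adds = 0
    for w, s in zip(weights, spikes):
        if s:  # a silent input (s == 0) costs nothing and its weight is never read
            total += w
            adds += 1
    return total, adds


weights = [0.5, -0.25, 0.75, 0.125, -0.5, 0.25]
spikes = [1, 0, 0, 1, 0, 0]  # sparse input: only 2 of 6 inputs fired

print(mac_update(weights, spikes))    # (0.625, 6)
print(spike_update(weights, spikes))  # (0.625, 2)
```

Both versions produce the same membrane update, but the spike-driven one performs two additions instead of six multiply-accumulates and never touches the four weights whose inputs stayed silent.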

The vast majority of neuromorphic hardware designs support rate-based SNNs, where information is encoded in spike rates. Rate-based SNNs can be inefficient because a large number of spikes must be transmitted and processed during inference. A more efficient encoding scheme is time-to-first-spike (TTFS) encoding, where information is encoded in the relative arrival times of spikes. In TTFS-based SNNs, each neuron spikes at most once during the entire inference process, which results in high sparsity. However, while TTFS-based SNNs are sparser than rate-based SNNs, they have yet to match the accuracy of rate-based SNNs.
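To make the sparsity difference concrete, the sketch below encodes the same input values both ways over a fixed window of `T` time steps. It assumes a simple deterministic rate code (spike count proportional to the value) and a linear TTFS code (larger values spike earlier, zero values never spike); the function names and the choice of `T` are illustrative assumptions, not the encoding used in our paper.

```python
from __future__ import annotations


# Illustrative rate code (assumption): spike count proportional to the value.
def rate_encode(value: float, T: int) -> list[int]:
    """Emit round(value * T) spikes, spread evenly over T time steps."""
    n = round(value * T)
    train = [0] * T
    for k in range(n):
        train[(k * T) // n] = 1  # evenly spaced, distinct spike positions
    return train


# Illustrative linear TTFS code (assumption): earlier spike = larger value.
def ttfs_encode(value: float, T: int) -> list[int]:
    """Emit at most one spike, whose time step encodes the value."""
    train = [0] * T
    if value > 0:
        t = round((1.0 - value) * (T - 1))  # value 1.0 fires at t = 0
        train[t] = 1
    return train


def first_spike_time(train: list[int]) -> int | None:
    """Return the index of the first spike, or None if nothing fired."""
    return train.index(1) if 1 in train else None


T = 16
pixels = [0.0, 0.25, 0.5, 1.0]  # normalized input intensities in [0, 1]

rate_spikes = sum(sum(rate_encode(v, T)) for v in pixels)
ttfs_spikes = sum(sum(ttfs_encode(v, T)) for v in pixels)
print(rate_spikes, ttfs_spikes)  # 28 3
print([first_spike_time(ttfs_encode(v, T)) for v in pixels])  # [None, 11, 8, 0]
```

With 16 time steps, the rate code emits 28 spikes for these four values, while the TTFS code emits 3: each nonzero value costs exactly one spike regardless of its magnitude, and the value itself is carried by when that spike occurs.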

What we did

The main objective of our work was to accelerate the inference of TTFS-based SNNs on low-power devices with minimal loss of accuracy. To that end, this work focused on (1) improving the classification performance of TTFS-based SNNs, and (2) designing a low-power neuromorphic hardware accelerator for performing inference of TTFS-based SNNs. The main contributions of this work are as follows:

- A new training algorithm that reduces the errors accumulated as a result of converting pre-trained neural network models to SNNs.

- A novel low-power neuromorphic architecture, YOSO, designed to accelerate the inference operations of TTFS-based SNNs.

- An end-to-end neuromorphic technique that demonstrates state-of-the-art performance and accuracy for TTFS-based SNNs.

Takeaways

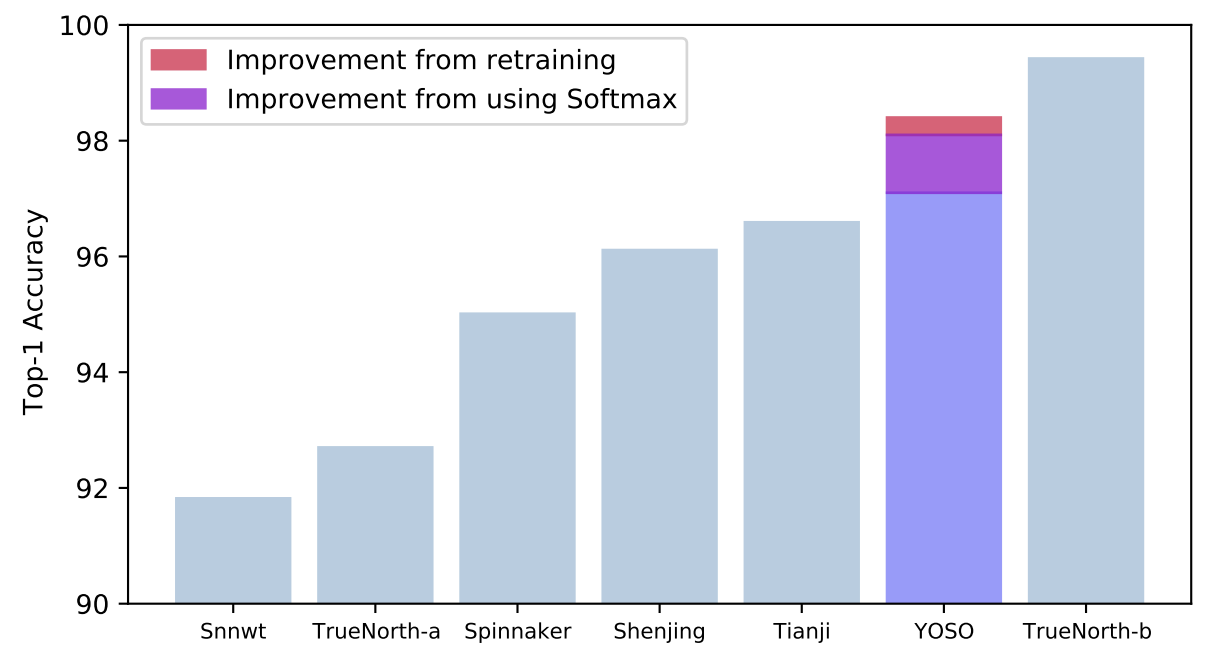

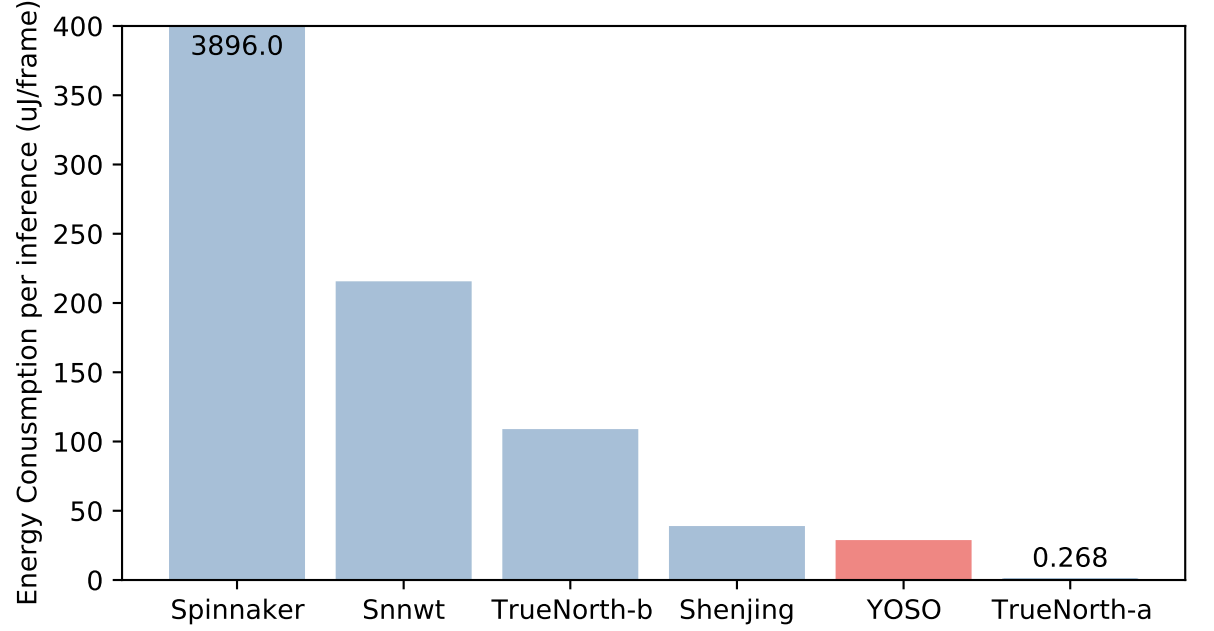

The YOSO accelerator is the only design in this comparison that achieves both high accuracy and low energy consumption at the same time.

TrueNorth-b consumes 3.83x more energy than YOSO to achieve its higher accuracy, while TrueNorth-a gives up a significant amount of accuracy (92.70% vs. 98.40% for our work) to achieve lower energy consumption.

More details can be found in our paper.