Link to full paper (accepted to the International Conference on Machine Learning (ICML) Workshop 2021)

The Problem

Data efficiency and generalization are key challenges in deep learning and deep reinforcement learning, as many models are trained on large-scale, domain-specific, and expensive-to-label datasets. Self-supervised models trained on large-scale uncurated datasets have shown successful transfer to diverse settings.

What we did

-

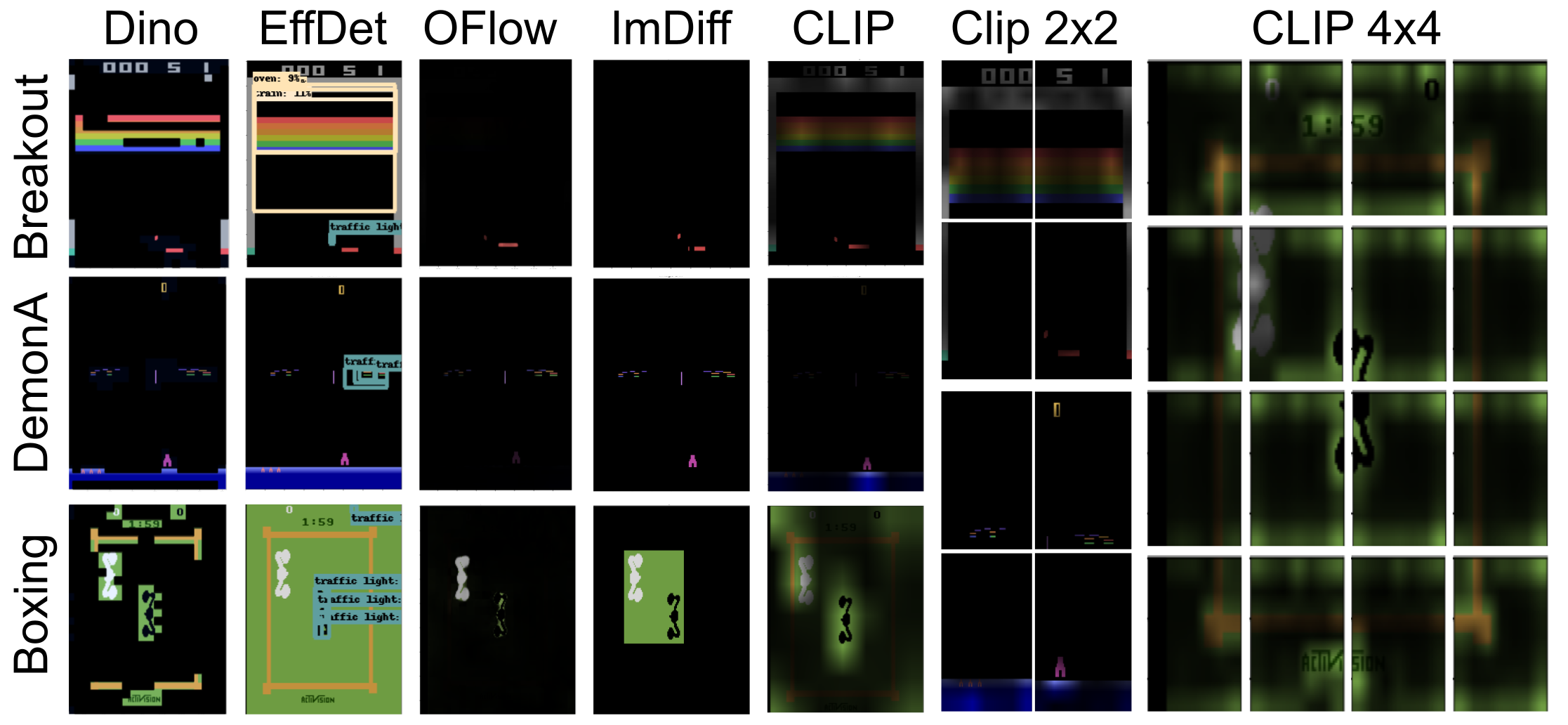

We investigated the use of pretrained image representations and spatiotemporal attention for state representation learning in Atari.

-

We also explored fine-tuning pretrained representations with self-supervised techniques, i.e., contrastive predictive coding, spatiotemporal contrastive learning, and augmentations.
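To make the contrastive fine-tuning idea concrete, here is a minimal sketch of an InfoNCE-style loss, the objective commonly used in contrastive predictive coding. It is an illustration under stated assumptions, not the implementation from the paper: the function name `info_nce`, the `temperature` value, and the choice to treat the other items in the batch as negatives are all hypothetical.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(dot(v, v)) or 1.0
    return [a / norm for a in v]

def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss over a batch (hypothetical helper for illustration).

    positives[i] is the positive example for anchors[i]; every other
    positives[j] is treated as a negative for that anchor.
    """
    anchors = [normalize(a) for a in anchors]
    positives = [normalize(p) for p in positives]
    total = 0.0
    for i, a in enumerate(anchors):
        # Cosine similarity between this anchor and every candidate.
        logits = [dot(a, p) / temperature for p in positives]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy with the matching index as the correct class.
        total += log_denom - logits[i]
    return total / len(anchors)
```

In a setup like the one described above, the anchors and positives could be embeddings of nearby frames from the same episode, so that minimizing the loss pulls temporally related states together; that pairing scheme is likewise an assumption here.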

Takeaways

Our results show that pretrained self-supervised models that were not trained on domain-specific data perform competitively with state-of-the-art self-supervised models trained on large-scale domain-specific data. Thus, pretrained models enable data-efficient and generalizable state representation learning for RL.

Moreover, our framework, PEARL (Pretrained Encoder and Attention for Representation Learning), investigates the use of not only pretrained image representations but also pretrained spatiotemporal attention. Our pretrained image representation model also uses attention, and our results show that attention helps state representation learning.
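As a rough illustration of how attention can combine per-frame features into a single state representation, the sketch below applies scaled dot-product attention over a short stack of frame embeddings. It covers only the temporal axis and assumes the embeddings come from some frozen pretrained encoder; the names `attention_pool`, `frame_features`, and `query` are hypothetical and do not come from the PEARL code.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention_pool(frame_features, query):
    """Pool T frame embeddings into one state vector (illustrative only).

    frame_features: list of T vectors, assumed to be outputs of a frozen
                    pretrained image encoder (one vector per frame).
    query:          a single query vector of the same dimension.
    Returns the pooled state vector and the attention weights.
    """
    d = len(query)
    # Scaled dot-product score between the query and each frame.
    scores = [
        sum(q * f for q, f in zip(query, feat)) / math.sqrt(d)
        for feat in frame_features
    ]
    weights = softmax(scores)
    # Weighted sum of frame embeddings, one output value per dimension.
    state = [
        sum(w * feat[k] for w, feat in zip(weights, frame_features))
        for k in range(d)
    ]
    return state, weights
```

With an all-zero query every frame receives equal weight and the result reduces to mean pooling; a learned or pretrained query would instead emphasize the frames most relevant to the current state.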

More details can be found in our paper here.