The Problem

In recent years, as machine learning has spread across a wide range of applications, massive troves of data have been collected from individuals. Be it users’ online activity or diagnosis reports, large amounts of sensitive data are collected by a growing number of institutions, ranging from hospitals and government bodies to private companies and research organizations. This has ushered in growing concerns about the potential loss of user privacy.

What we did

With growing concerns over privacy, this work studies privacy-preservation techniques within the differential privacy framework and compares medical and non-medical datasets from a privacy standpoint. We compare Differentially Private Stochastic Gradient Descent (DP-SGD) and Private Aggregation of Teacher Ensembles (PATE) on three publicly available datasets.
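For readers unfamiliar with the two methods, here is a minimal NumPy sketch of the core step in each: the clipped, noised gradient update used by DP-SGD, and the noisy vote aggregation used by PATE. It is an illustration under stated assumptions, not the code used in the paper. The function names, parameters (`clip_norm`, `noise_multiplier`, `laplace_scale`), and toy inputs are hypothetical, and privacy accounting is left out entirely.

```python
import numpy as np


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update (sketch): clip each example's gradient, add Gaussian noise, average.

    per_example_grads has shape (batch_size, dim); params has shape (dim,).
    All names here are illustrative assumptions, not taken from the paper.
    """
    batch_size = per_example_grads.shape[0]
    # Scale each per-example gradient so its L2 norm is at most clip_norm.
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale
    # Gaussian noise with standard deviation noise_multiplier * clip_norm on the summed gradient.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean_grad = (clipped.sum(axis=0) + noise) / batch_size
    return params - lr * noisy_mean_grad


def pate_noisy_vote(teacher_votes, num_classes, laplace_scale, rng):
    """PATE-style aggregation (sketch): add Laplace noise to per-class vote counts, take the argmax.

    teacher_votes is a 1-D integer array with one predicted label per teacher.
    """
    counts = np.bincount(teacher_votes, minlength=num_classes).astype(float)
    noisy_counts = counts + rng.laplace(0.0, laplace_scale, size=num_classes)
    return int(np.argmax(noisy_counts))


rng = np.random.default_rng(0)

# Toy DP-SGD step: two examples, two parameters.
grads = np.array([[3.0, 4.0], [0.0, 0.0]])
new_params = dp_sgd_step(np.zeros(2), grads, lr=1.0, clip_norm=1.0,
                         noise_multiplier=1.1, rng=rng)

# Toy PATE query: ten hypothetical teachers voting over three classes.
votes = np.array([0, 2, 2, 2, 1, 2, 2, 0, 2, 2])
label = pate_noisy_vote(votes, num_classes=3, laplace_scale=1.0, rng=rng)
```

In both cases the noise scale is the knob that trades accuracy for privacy: larger values give stronger privacy guarantees and noisier updates or labels.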

Takeaways

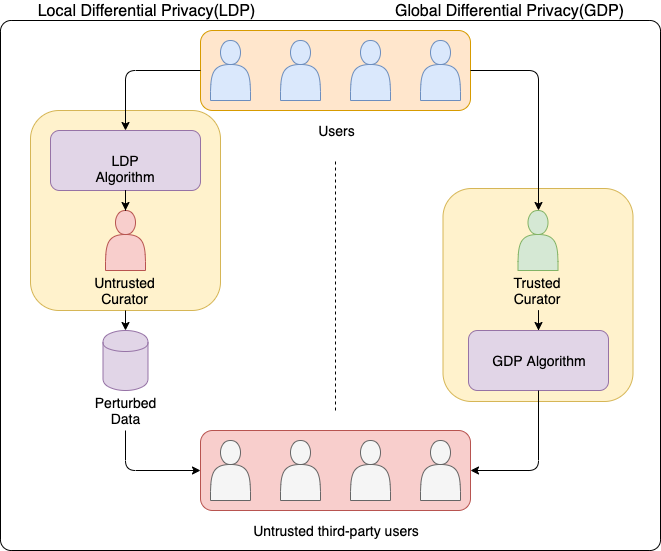

In the past decade, Differential Privacy has become the de facto framework for performing privacy analysis. There are numerous relaxations of Differential Privacy, the most prominent being (ε, δ)-Differential Privacy (DP) and Rényi Differential Privacy (RDP).
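For reference, the standard textbook definitions of these two notions are given below. The symbols ($\mathcal{M}$ for the randomized mechanism, $D$ and $D'$ for adjacent datasets, $\alpha$ for the Rényi order) are conventional notation assumed here, not taken from this page.

$$
\begin{aligned}
&\text{$(\varepsilon, \delta)$-DP:} && \Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon}\, \Pr[\mathcal{M}(D') \in S] + \delta \quad \text{for all adjacent } D, D' \text{ and all output sets } S, \\
&\text{$(\alpha, \varepsilon)$-RDP:} && D_{\alpha}\big(\mathcal{M}(D) \,\|\, \mathcal{M}(D')\big) \le \varepsilon, \quad \text{where } D_{\alpha}(P \,\|\, Q) = \frac{1}{\alpha - 1} \log \mathbb{E}_{x \sim Q}\!\left[\left(\frac{P(x)}{Q(x)}\right)^{\alpha}\right], \ \alpha > 1.
\end{aligned}
$$

The two are linked: a mechanism satisfying $(\alpha, \varepsilon)$-RDP also satisfies $\left(\varepsilon + \frac{\log(1/\delta)}{\alpha - 1},\, \delta\right)$-DP for any $0 < \delta < 1$, which is why RDP is commonly used to track the privacy cost of iterative training.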

- Healthcare datasets are often plagued by missing values and class imbalance. We found that these factors significantly affected the privacy-utility trade-off.

- The most important factors were found to be the complexity of the dataset and the type of noise applied.

More details can be found in the paper here.